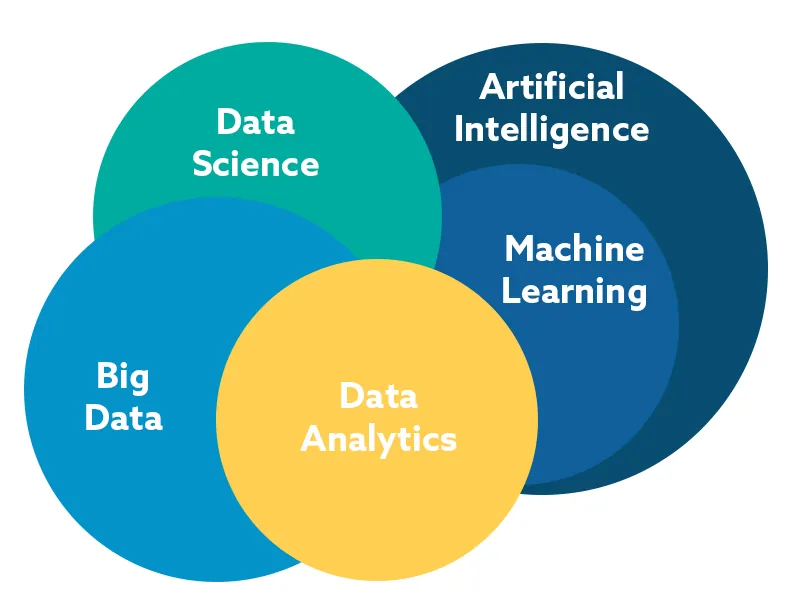

The Role of Big Data in AI and Machine Learning

In today’s rapidly evolving information landscape, the intersection of big data, artificial intelligence (AI), and machine learning (ML) is reshaping industries, driving innovation, and transforming how we interact with the world. As the volume, velocity, and variety of data continue to grow, understanding the role of big data in AI and ML becomes more important.

What is Big Data?

Big Data refers to datasets so large and complex that traditional data processing software cannot handle them effectively. These datasets come from diverse sources, including social media, sensors, transactions, and more. Three fundamental traits, known as the three V’s (Volume, Velocity, and Variety), define big data:

Volume: The sheer amount of data generated every second.

Velocity: The speed at which data is created and processed.

Variety: The different types of data, including structured, semi-structured, and unstructured formats.

The Synergy Between Big Data, AI, and ML

1. Data as Fuel for AI and ML Models

At the heart of AI and ML lies the need for data. These technologies depend on large datasets to learn patterns, make predictions, and improve their accuracy over time. The more high-quality data available, the better the models can perform. For instance, a machine learning model trained on extensive datasets can detect nuances and correlations that smaller datasets can miss, leading to more reliable and insightful results.
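To make the "more data, better model" idea concrete, here is a minimal sketch using only the Python standard library. It fits a straight line to synthetic noisy data at several dataset sizes and reports how far the fitted slope lands from the true one. Everything in it (the function names `fit_slope` and `mean_slope_error`, the true slope of 3.0, the noise level) is an assumption made up for illustration, not something taken from a real system.

```python
import random
import statistics


def fit_slope(xs, ys):
    """Ordinary least-squares slope for a line y = slope * x + intercept."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def mean_slope_error(n_samples, true_slope=3.0, noise=2.0, trials=30, seed=0):
    """Average absolute slope error over several synthetic datasets.

    All parameters are illustrative assumptions: data is drawn as
    y = true_slope * x + Gaussian noise, with x uniform in [0, 10].
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        xs = [rng.uniform(0.0, 10.0) for _ in range(n_samples)]
        ys = [true_slope * x + rng.gauss(0.0, noise) for x in xs]
        errors.append(abs(fit_slope(xs, ys) - true_slope))
    return statistics.fmean(errors)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(f"{n:>5} samples -> mean slope error {mean_slope_error(n):.4f}")
```

On this toy problem the error tends to shrink as the sample count grows. Real models and real data are messier, so treat this as an intuition aid rather than a guarantee.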

2. Enhanced Learning and Generalization

Big Data enables AI and ML algorithms to learn from diverse examples. When models are exposed to a wide range of scenarios, they improve at generalizing their knowledge to new, unseen data. For example, in image recognition, having about 1,000 images across various lighting conditions and angles allows the model to identify objects with high accuracy.

3. Real-Time Insights and Predictions

The velocity of big data allows AI and ML systems to process information in real time, as events occur. Businesses can leverage this capability for immediate decision-making. For instance, in finance, algorithms can analyze market trends and execute trades within milliseconds, adjusting their strategies as new data arrives.
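Processing data "as it arrives" usually means updating statistics incrementally instead of re-reading the whole history. The sketch below uses Welford's online algorithm to keep a running mean and standard deviation, then flags values that sit far from what has been seen so far. The class name, the threshold of three standard deviations, the warm-up length, and the sample prices are all illustrative assumptions, and this is nowhere near a real trading system.

```python
import math


class RunningStats:
    """Welford's online algorithm: mean and variance in one pass, O(1) memory."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def std(self):
        # Sample standard deviation; zero until at least two values are seen.
        if self.count < 2:
            return 0.0
        return math.sqrt(self._m2 / (self.count - 1))


def flag_outliers(stream, threshold=3.0, warmup=5):
    """Yield (value, is_outlier) for each value, judged against earlier values only."""
    stats = RunningStats()
    for value in stream:
        is_outlier = (
            stats.count >= warmup
            and stats.std > 0
            and abs(value - stats.mean) > threshold * stats.std
        )
        yield value, is_outlier
        # The value is folded into the statistics even when it was flagged.
        stats.update(value)


if __name__ == "__main__":
    # Hypothetical price ticks with one obvious spike.
    prices = [100.0, 101.0, 99.0, 100.5, 99.5, 100.2, 130.0, 100.1]
    for price, is_outlier in flag_outliers(prices):
        print(price, "<- unusual" if is_outlier else "")
```

Each new value costs a constant amount of work regardless of how much data came before, which is what makes this style of computation suitable for high-velocity streams.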

4. Personalization and Customer Insights

Big data facilitates a deep understanding of customer behavior. By analyzing large datasets from customer interactions, businesses can create personalized experiences. AI algorithms can segment audiences and predict preferences, enhancing marketing strategies and customer satisfaction. This is especially evident on platforms like Netflix and Amazon, where recommendations are driven by sophisticated data analysis.

5. Continuous Improvement and Adaptability

AI and ML models thrive on feedback loops. With big data, these systems can continuously learn from new inputs and outcomes, refining their algorithms over time. This adaptability is critical in fields such as healthcare, where continuous data collection leads to improved patient outcomes through more accurate diagnostics and treatment plans.
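A feedback loop can be sketched as a model that makes a prediction, sees the real outcome, and nudges its parameters a little each time. The example below is one simple way to do that: a one-feature linear model updated by stochastic gradient descent, one observation at a time. The class name `OnlineLinearModel`, the learning rate, and the synthetic relationship y = 2x + 1 are assumptions chosen for illustration.

```python
class OnlineLinearModel:
    """One-feature linear model updated one observation at a time with SGD."""

    def __init__(self, learning_rate=0.05):
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.weight * x + self.bias

    def learn(self, x, y):
        """Take one gradient step on squared error; return the error before the update."""
        error = self.predict(x) - y
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error
        return error


def run_feedback_loop(model, observations):
    """Feed (x, y) pairs to the model in arrival order, collecting absolute errors."""
    return [abs(model.learn(x, y)) for x, y in observations]


if __name__ == "__main__":
    # Synthetic, noise-free feedback following y = 2x + 1 (an assumed relationship).
    xs = [0.0, 0.5, 1.0, 1.5, 2.0] * 400
    observations = [(x, 2.0 * x + 1.0) for x in xs]
    model = OnlineLinearModel()
    errors = run_feedback_loop(model, observations)
    print(f"first error: {errors[0]:.3f}, last error: {errors[-1]:.6f}")
    print(f"learned weight: {model.weight:.3f}, bias: {model.bias:.3f}")
```

The model never stores past observations; it only carries its current weight and bias forward. With noisy real-world data you would typically add safeguards such as a decaying learning rate and periodic evaluation on held-out data.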

Challenges and Considerations

While big data offers huge potential for AI and ML, it also presents challenges. Issues such as data privacy, security, and ethical concerns must be addressed. Additionally, the quality of data is paramount; poor or biased data can lead to flawed models and unintended consequences.
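Data quality problems are easier to address when they are measured before training. As a rough sketch, the function below counts missing values per field and reports the share of each label, which can hint at gaps or imbalance in a dataset. The function name `quality_report` and the field names in the sample records are hypothetical, and a check like this covers only a small part of what data quality and bias audits involve.

```python
from collections import Counter


def quality_report(rows, label_key):
    """Summarize missing values per field and the share of each label.

    A value counts as missing when it is None or an empty string.
    Fields that are absent from a row entirely are not counted.
    """
    missing = Counter()
    labels = Counter()
    for row in rows:
        for field, value in row.items():
            if value is None or value == "":
                missing[field] += 1
        label = row.get(label_key)
        if label is not None and label != "":
            labels[label] += 1
    total = sum(labels.values())
    label_share = {k: v / total for k, v in labels.items()} if total else {}
    return {"rows": len(rows), "missing": dict(missing), "label_share": label_share}


if __name__ == "__main__":
    # Hypothetical records; field names are made up for illustration.
    records = [
        {"age": 34, "income": 52000, "approved": "yes"},
        {"age": None, "income": 48000, "approved": "yes"},
        {"age": 29, "income": None, "approved": "yes"},
        {"age": 41, "income": 61000, "approved": "no"},
    ]
    print(quality_report(records, label_key="approved"))
```

A heavily skewed label share or a field with many missing values does not prove a model will be biased, but it is a useful prompt to look more closely before training.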